Agentplex Weekly - Issue #7

Building AI Agents with Frameworks. LangGraph. dspy-agents. Semantic Kernel. Langroid. LangFlow. promptfoo. AGiXT Adaptive Memory. Dynamic Self-Adapting Agentic Personas. Evo Algos + Agents.

On using frameworks to build AI agents. I guess the main reason programming frameworks exist is to impose structure and enforce order based on well-established patterns. Unfortunately, although patterns are emerging quickly, there are no “clearly well-established patterns” for developing AI apps and LLM-based agents yet.

We’re still trying to figure out what those AI design patterns are... Maybe 5 design patterns? Maybe 8? Or maybe 9? So this is a bit like chasing a fast-moving target and herding tech kittens, and most LLM-based frameworks are trying to catch up. Regardless, many people decided to build agents using frameworks like LlamaIndex and LangChain, which are notoriously super high-level and extremely abstracted. Unsurprisingly, some of them got caught between a rock and a hard place.

Building agents with LangChain no more. The team at Octomind was one of the early adopters of LangChain, but in 2024 they decided to stop using it. In this good blog post, they explain why LangChain’s high-level abstractions do more harm than good, review the lessons they learned using LangChain in production, and describe what they should have done instead. They conclude that “you don’t need a framework to build agents” and that “it’s worth the investment to build your own toolkit.” Blog post: Why we no longer use LangChain for building our AI agents.
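To make “you don’t need a framework” concrete, here is a minimal sketch of a framework-free agent loop in plain Python. It is not Octomind’s code: `fake_llm` is a hypothetical stand-in for a real model call, and the `TOOL:`/`FINAL:` reply format and the tool registry are assumptions made up for illustration.

```python
from typing import Callable

# Hypothetical tool registry: plain Python functions keyed by name.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda args: str(sum(int(x) for x in args.split(","))),
    "upper": lambda args: args.upper(),
}

def fake_llm(history: list[str]) -> str:
    """Hypothetical LLM stub: asks for a tool first, then answers from the observation."""
    if not any(line.startswith("OBSERVATION:") for line in history):
        return "TOOL: add 2,3"
    return "FINAL: " + history[-1].removeprefix("OBSERVATION: ")

def run_agent(task: str, llm: Callable[[list[str]], str] = fake_llm, max_steps: int = 5) -> str:
    history = [f"TASK: {task}"]
    for _ in range(max_steps):
        reply = llm(history)
        if reply.startswith("FINAL:"):
            return reply.removeprefix("FINAL:").strip()
        # Parse "TOOL: <name> <args>" and run the named tool.
        name, _, args = reply.removeprefix("TOOL:").strip().partition(" ")
        result = TOOLS[name](args) if name in TOOLS else f"unknown tool {name}"
        history += [reply, f"OBSERVATION: {result}"]
    return "gave up"  # step budget exhausted without a final answer

print(run_agent("What is 2 + 3?"))
```

In a real setup you would swap `fake_llm` for an actual model call; retries, tracing, and robust parsing of model replies then become your job rather than a framework’s.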

Graphs + agents to the rescue. Recently, there’s been a lot of research on improving LLM-based apps by combining RAG with graphs; I wrote about this here. The initial version of LangChain was indeed very high-level and excessively abstracted, but LangChain is now pivoting to a lower-level approach built around graphs. LangGraph is a very low-level, controllable framework for building stateful, agentic apps. Nodes and edges are just Python functions, and you can use LangGraph without LangChain.
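Here is a rough, framework-free sketch of the “nodes and edges are just Python functions” idea. It is not LangGraph’s API: `run_graph`, the `State` fields, and the `END` sentinel are our own hypothetical names. Nodes return partial state updates, and a routing function plays the role of a conditional edge that can loop.

```python
from typing import Callable, TypedDict

END = "__end__"  # hypothetical sentinel marking the end of the graph

class State(TypedDict):
    question: str
    draft: str
    revisions: int

def draft(state: State) -> dict:
    # A node: reads the shared state and returns a partial update.
    return {"draft": f"Answer to: {state['question']}", "revisions": 0}

def revise(state: State) -> dict:
    return {"draft": state["draft"] + " (revised)", "revisions": state["revisions"] + 1}

def should_continue(state: State) -> str:
    # A conditional edge: a plain function that names the next node.
    return "revise" if state["revisions"] < 2 else END

def run_graph(nodes: dict[str, Callable], edges: dict[str, Callable], start: str, state: State) -> State:
    current = start
    while current != END:
        state = {**state, **nodes[current](state)}  # merge the node's update into the state
        current = edges[current](state)
    return state

nodes = {"draft": draft, "revise": revise}
edges = {"draft": should_continue, "revise": should_continue}
final = run_graph(nodes, edges, "draft", {"question": "What is LangGraph?", "draft": "", "revisions": 0})
print(final["draft"])
```

Because every node and edge is an ordinary function, each one can be unit-tested on its own, which is much of the appeal of the low-level approach.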

Watch this video on why LangGraph is better and more powerful than LangChain for building AI agents.

Also, check out this cool, hands-on video on how to build a custom agent with LangGraph, Gemini and Claude models, Perplexity search, and Groq inference.

In the real world, agents will need to handle broader use cases. IMO the authors of Octomind’s blog post above oversimplify things when they say that “building LLM-based apps is straightforward and you just need simple code.” That may hold when you have a rather narrowly defined use case like Octomind’s. And crucially, LangChain is not the only framework available.

In most real-world scenarios, especially enterprise agents in production, agents will have to deal with a much broader scope, and things will get much more complicated. A lot more! BTW, check this out: Google 101 Real-world AI Agents Use cases from the world’s leading organisations.

LangChain was very useful in the early days of FAFOing, prototyping, and demoing LLM-based agents. But today, if you are building enterprise AI agent apps for use cases like those above, you have two options: 1) build your own full AI agent toolkit and full stack from scratch, which takes lots of $, time, and a brilliant team; or 2) use other pre-built frameworks, tools, and platforms. The question is no longer about LangChain but about which other frameworks you could use and why.

Optimising and deploying real-world agents with DSPy. A big issue with current LLM-based agents is that they can’t be trained as agents :-( They are powered by static, frozen LLMs. Unlike LangChain, DSPy separates the program flow from the prompts used at each step of the workflow, and it automates prompt optimisation and fine-tuning. DSPy could become an “ideal” optimisation-oriented framework for building higher-order AI systems and agentic apps. Check out the dspy-agents repo for building agents with DSPy.

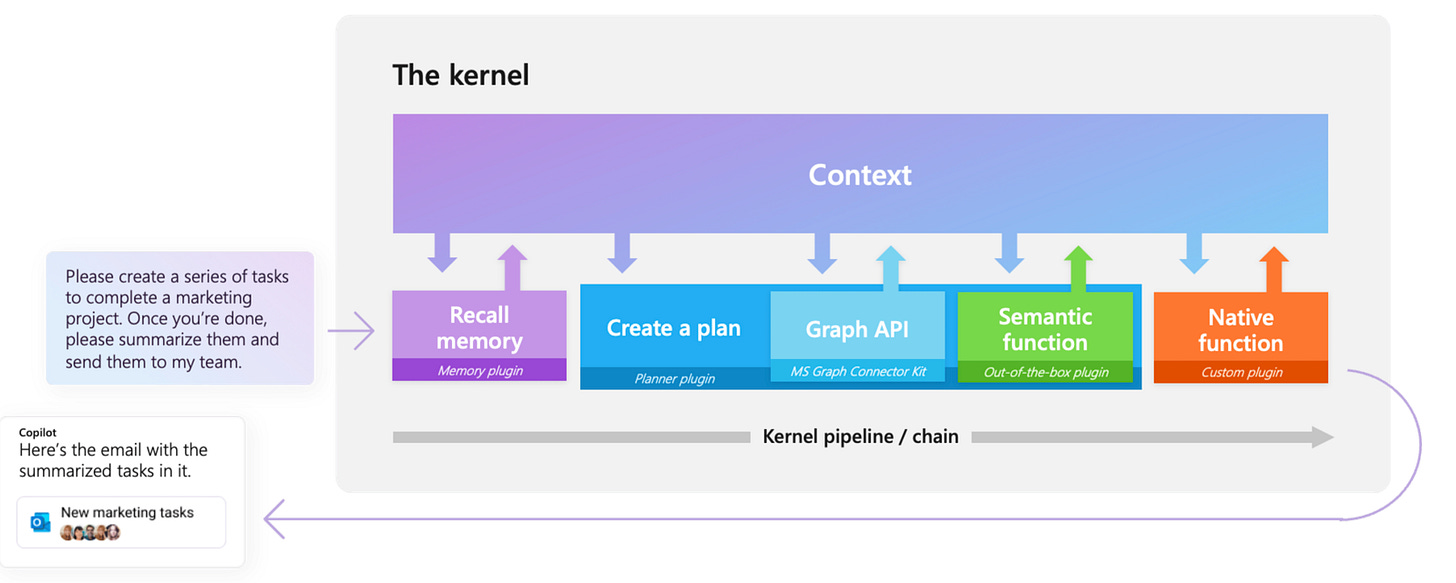
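DSPy’s real API (signatures, modules, optimisers) is much richer; this is a toy sketch, not DSPy code, of the core idea: keep the program flow fixed, treat the prompt instruction as a tunable parameter, and pick the candidate that scores best on a small dev set. `fake_llm`, the candidate instructions, and the dev set are hypothetical.

```python
from typing import Callable

def fake_llm(instruction: str, question: str) -> str:
    """Hypothetical LLM stub: answers "a+b" questions, tersely only if told to."""
    a, b = (int(x) for x in question.split("+"))
    answer = str(a + b)
    return answer if "only the number" in instruction else f"The answer is {answer}."

# The program flow is fixed; the instruction is a separate, tunable parameter.
def qa_program(instruction: str, question: str, llm: Callable[[str, str], str] = fake_llm) -> str:
    return llm(instruction, question).strip()

def exact_match(pred: str, gold: str) -> bool:
    return pred == gold

def optimise_instruction(candidates: list[str], devset: list[tuple[str, str]]) -> tuple[str, float]:
    """Return the candidate instruction with the highest dev-set accuracy."""
    best, best_score = candidates[0], -1.0
    for inst in candidates:
        score = sum(exact_match(qa_program(inst, q), gold) for q, gold in devset) / len(devset)
        if score > best_score:
            best, best_score = inst, score
    return best, best_score

devset = [("2+3", "5"), ("10+7", "17"), ("1+1", "2")]
candidates = ["Answer the question.", "Reply with only the number."]
print(optimise_instruction(candidates, devset))
```

The point of the separation is that the optimiser can change the prompt without touching `qa_program`, which is the kind of decoupling the paragraph above attributes to DSPy.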

Agents that call code with plugins. Microsoft Semantic Kernel is an SDK that addresses many of the issues LangChain suffers from, and it has been designed for building agents at enterprise scale. It integrates LLMs such as OpenAI and Azure OpenAI models with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by letting you define plugins that can be chained together in just a few lines of code. It incorporates memory, graph, planning, and semantic plugins. And importantly, it automatically orchestrates the plugins with AI. Repo: Semantic Kernel.
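A toy sketch of the plugin-chaining idea in plain Python. This is not Semantic Kernel’s API: the `Kernel` class, its `plugin` decorator, and the hard-coded plan are hypothetical. In Semantic Kernel, the AI orchestration would pick which plugins to call; here the sequence is fixed so the chaining is easy to see.

```python
from typing import Callable

class Kernel:
    """Hypothetical mini-kernel: a registry of named plugin functions."""

    def __init__(self) -> None:
        self.functions: dict[str, Callable[[str], str]] = {}

    def plugin(self, name: str):
        # Decorator that registers a plain function under a plugin name.
        def register(fn: Callable[[str], str]) -> Callable[[str], str]:
            self.functions[name] = fn
            return fn
        return register

    def run(self, plan: list[str], text: str) -> str:
        # Chain plugins: each function's output becomes the next one's input.
        for name in plan:
            text = self.functions[name](text)
        return text

kernel = Kernel()

@kernel.plugin("strip")
def strip_ws(text: str) -> str:
    return " ".join(text.split())

@kernel.plugin("title")
def title_case(text: str) -> str:
    return text.title()

@kernel.plugin("exclaim")
def exclaim(text: str) -> str:
    return text + "!"

# Hard-coded plan; an AI planner would choose this sequence in a real system.
print(kernel.run(["strip", "title", "exclaim"], "  hello   plugin  world "))
```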

The concurrent-computation actor model then, and multi-agent programmatic frameworks today. In the early AI summer of 1973, researchers were building physics-inspired AI models drawing on LISP, Smalltalk, and Simula. One of those models was the actor model, which treats the actor as the basic building block of concurrent computation. Fifty-one years later, a group of AI devs published Langroid, a framework for orchestrating and deploying multi-agent systems in production using the actor model. You set up agents, equip them with optional components (an LLM, a vector store, and tools/functions), assign them tasks, and have them solve a problem collaboratively by exchanging messages. People say Langroid is very robust in production. Repo: Langroid: Multi-agent framework for LLM apps.
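A stdlib-only `asyncio` sketch of the actor idea Langroid builds on: each actor owns a mailbox and reacts only to the messages it receives. This is not Langroid’s API; the `Writer`/`Critic` roles and the “approve at v3” rule are hypothetical stand-ins for LLM-backed agents, and the shared `log` list exists only for display.

```python
import asyncio

class Actor:
    """Minimal actor: a name plus a private mailbox (an asyncio.Queue)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.mailbox: asyncio.Queue = asyncio.Queue()

    async def send(self, msg) -> None:
        # The only way to interact with an actor is to put a message in its mailbox.
        await self.mailbox.put(msg)

class Critic(Actor):
    async def run(self, log: list) -> None:
        # Process (sender, draft) messages until a None "stop" message arrives.
        while (msg := await self.mailbox.get()) is not None:
            sender, draft = msg
            log.append(f"{self.name} reviewed: {draft}")
            await sender.send("approved" if draft.endswith("v3") else "revise")

class Writer(Actor):
    async def run(self, critic: Critic, log: list) -> None:
        draft = "draft v1"
        await critic.send((self, draft))
        while True:
            verdict = await self.mailbox.get()
            log.append(f"{self.name} got: {verdict}")
            if verdict == "approved":
                await critic.send(None)  # ask the critic to stop
                return
            draft = f"draft v{int(draft[-1]) + 1}"  # "revise" by bumping the version
            await critic.send((self, draft))

async def main() -> list:
    log: list = []  # display only; the actors never read it
    writer, critic = Writer("writer"), Critic("critic")
    await asyncio.gather(writer.run(critic, log), critic.run(log))
    return log

for line in asyncio.run(main()):
    print(line)
```

No actor touches another’s state directly; coordination happens purely through messages, which is what makes the model attractive for concurrent multi-agent setups.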

At Agentplex we’re predicting a boom cycle for new, exciting, and sophisticated agentic frameworks and tools. We’d like to know: are you using pre-built frameworks, tools, and platforms to build your AI agents, or are you building everything from scratch? I’m curious; share your thoughts in the comments.

Tools, platforms, and frameworks

Langflow: Visual flows for building agents. Building agents involves orchestrating many components, tools, and flows, and it can be hard to keep a full view of it all. Enter Langflow, a visual framework for building multi-agent and RAG applications. It’s open source, Python-powered, fully customisable, and LLM- and vector-store-agnostic. Repo here: LangFlow.

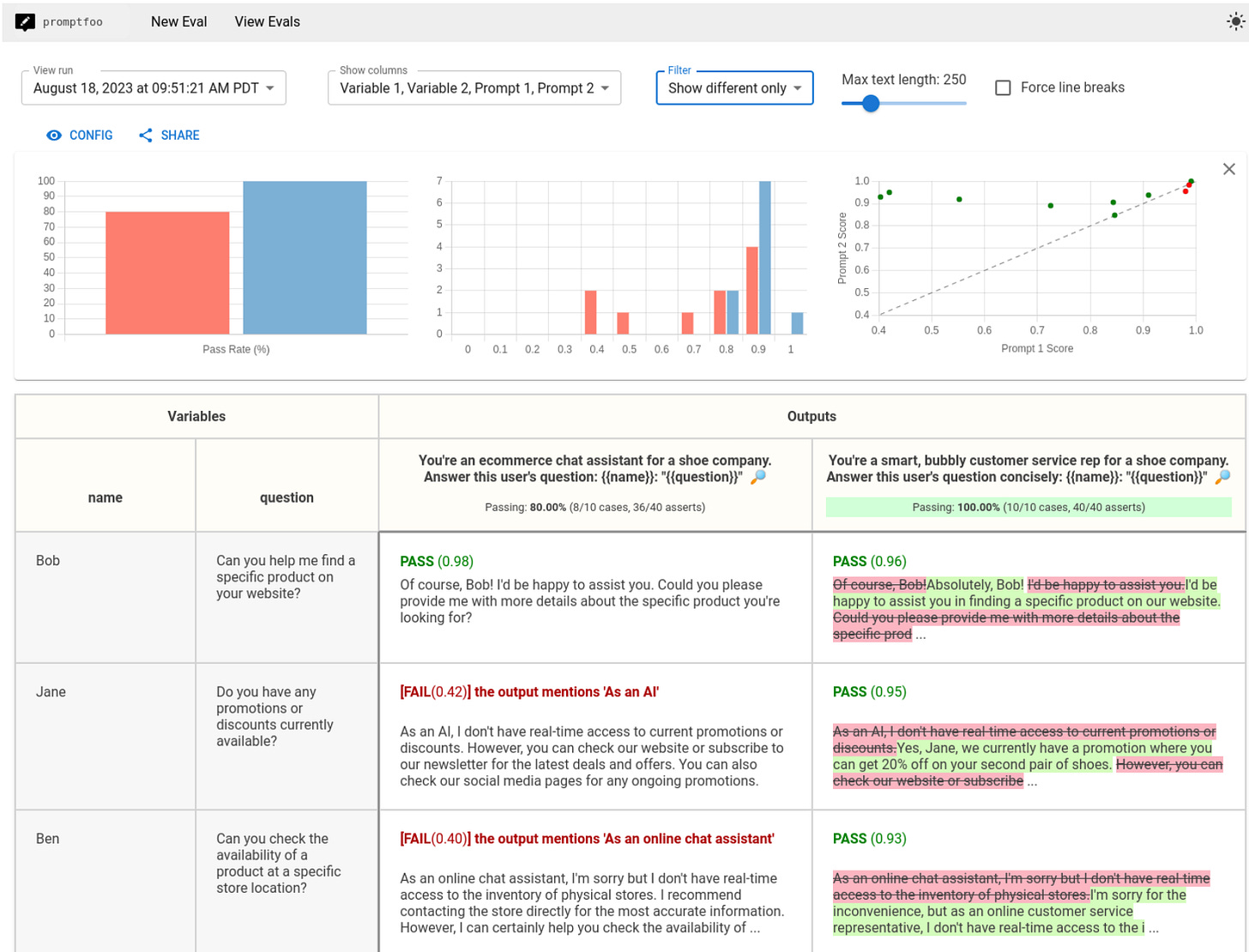

promptfoo: Test and score your prompts automatically. As long as LLMs keep dominating AI R&D, prompt engineering is here to stay. Hate it or love it, you had better master the magic arts of prompting. promptfoo is a cool tool for testing your prompts, agents, and RAG pipelines. Use LLM evals to improve your app’s quality and catch problems, and compare the performance of GPT, Claude, Gemini, Llama, and more. It uses simple declarative configs with command-line and CI/CD integration. Repo here: promptfoo.
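promptfoo itself is driven by declarative config files (see its docs). The sketch below is a hypothetical plain-Python illustration of the same idea: declarative test cases, assertions on model output, and a pass rate per provider. The test-case structure, check names, and `fake_provider` are our own assumptions, not promptfoo’s schema.

```python
from typing import Callable

# Declarative test cases (hypothetical structure, not promptfoo's config format).
PROMPT = "Translate to French: {text}"
TESTS = [
    {"vars": {"text": "hello"}, "assert": [("contains", "bonjour")]},
    {"vars": {"text": "thank you"}, "assert": [("contains", "merci"), ("max_length", 20)]},
]

def fake_provider(prompt: str) -> str:
    """Hypothetical model stub backed by a tiny lookup table."""
    table = {"hello": "Bonjour", "thank you": "Merci beaucoup"}
    return table.get(prompt.split(": ", 1)[1], "")

# Each check takes (model output, expected value) and returns pass/fail.
CHECKS: dict[str, Callable[[str, object], bool]] = {
    "contains": lambda out, v: str(v).lower() in out.lower(),
    "max_length": lambda out, v: len(out) <= int(v),
}

def evaluate(provider: Callable[[str], str]) -> float:
    """Fraction of test cases whose assertions all pass for this provider."""
    passed = 0
    for case in TESTS:
        output = provider(PROMPT.format(**case["vars"]))
        if all(CHECKS[kind](output, value) for kind, value in case["assert"]):
            passed += 1
    return passed / len(TESTS)

print(evaluate(fake_provider))
```

Calling `evaluate` with different provider functions gives a side-by-side pass rate, the kind of model comparison described above.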

AGiXT: Adaptive short-term and long-term memory for dynamic agents. A key part of developing responsive, interactive agents is managing memory dynamically. AGiXT is an MIT-licensed, dynamic AI agent automation platform that orchestrates instruction management and complex task execution across diverse AI providers. Combining adaptive memory, smart features, and a versatile plugin system, AGiXT delivers efficient and comprehensive AI solutions. Repo here: AGiXT.
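A toy sketch of short-term plus long-term agent memory. It does not reproduce AGiXT’s implementation: `AgentMemory` is hypothetical, and simple word overlap stands in for the embedding similarity a real system would more likely use.

```python
from collections import deque

class AgentMemory:
    """Toy memory: a bounded short-term buffer that spills into long-term storage."""

    def __init__(self, short_term_size: int = 3) -> None:
        self.short_term = deque(maxlen=short_term_size)
        self.long_term = []

    def add(self, message: str) -> None:
        # When the buffer is full, move the oldest message to long-term memory.
        if len(self.short_term) == self.short_term.maxlen:
            self.long_term.append(self.short_term[0])
        self.short_term.append(message)  # deque drops the oldest item automatically

    def recall(self, query: str, k: int = 2) -> list:
        # Rank long-term memories by word overlap with the query.
        words = set(query.lower().split())
        scored = [(len(words & set(m.lower().split())), m) for m in self.long_term]
        return [m for score, m in sorted(scored, key=lambda s: -s[0]) if score > 0][:k]

    def context(self, query: str) -> list:
        # Prompt context = relevant long-term memories + the recent conversation.
        return self.recall(query) + list(self.short_term)

memory = AgentMemory(short_term_size=2)
for msg in ["my name is Ada", "I like Python", "what's the weather", "remind me of my name"]:
    memory.add(msg)
print(memory.context("what is my name"))
```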

Research papers

Personalised agents that adapt their persona. Typically, the personality of a chat agent is static and defined a priori. Researchers at Tencent and collaborators introduce Self-evolving Personalised Dialogue Agents (SPDA), a new agentic framework that detects the user’s persona and in which the agents continuously evolve during the conversation, dynamically adapting their persona to better align with the user’s expectations. Paper: Evolving to be Your Soulmate: Personalised Dialogue Agents with Dynamically Adapted Personas.

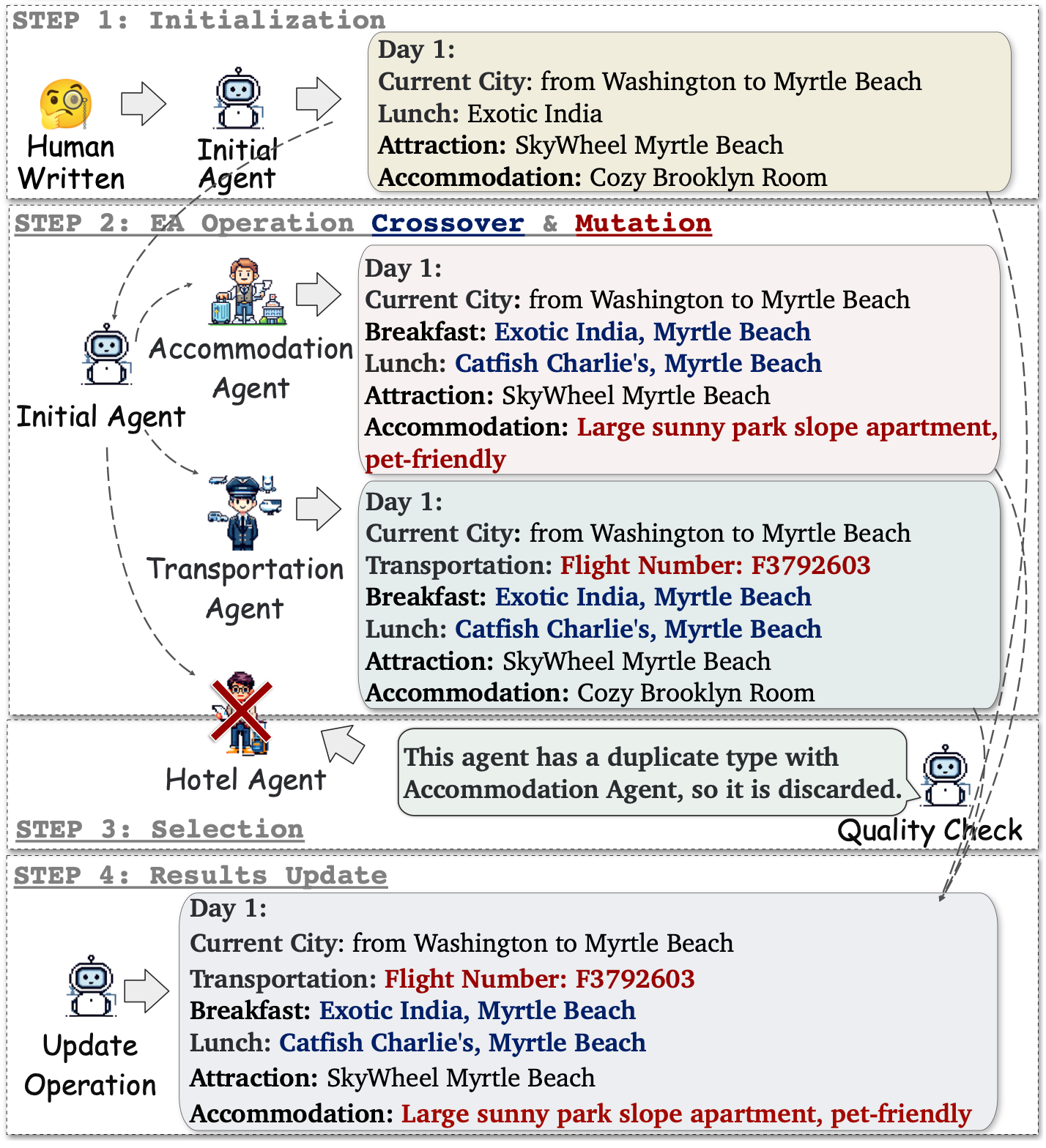
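A toy sketch of the persona-adaptation loop: detect cues about the user, then nudge the agent’s persona toward them on every turn. This is not the SPDA method from the paper; the trait names, keyword cues, and moving-average update are hypothetical simplifications.

```python
# Hypothetical keyword cues per user trait (not taken from the SPDA paper).
CUES = {
    "formal": {"please", "kindly", "regards"},
    "casual": {"hey", "lol", "cool"},
    "technical": {"api", "latency", "python"},
}

def detect_user_persona(message: str) -> dict[str, float]:
    # 1.0 if the message contains any cue word for a trait, else 0.0.
    words = set(message.lower().replace(",", " ").split())
    return {trait: float(len(words & cues) > 0) for trait, cues in CUES.items()}

def evolve_persona(agent: dict[str, float], observed: dict[str, float], rate: float = 0.5) -> dict[str, float]:
    # Move each trait part of the way toward the user's signal (exponential moving average).
    return {t: (1 - rate) * agent[t] + rate * observed[t] for t in agent}

def style_hint(agent: dict[str, float]) -> str:
    # Turn the dominant trait into an instruction for the next reply.
    dominant = max(agent, key=agent.get)
    return f"Respond in a {dominant} tone."

agent = {"formal": 0.5, "casual": 0.5, "technical": 0.0}
for msg in ["hey, cool bot", "lol what's the API latency"]:
    agent = evolve_persona(agent, detect_user_persona(msg))
print(agent, style_hint(agent))
```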

Evo algos to improve LLM-based agents. When solving complex tasks, LLM-based agents are limited by the capabilities of the base LLM that powers them. This paper introduces EvoAgent, a generic method that uses an evolutionary algorithm to automatically extend expert agents into multi-agent systems, improving the effectiveness of LLM-based agents on complex tasks. Paper: EvoAgent: Towards Automatic Multi-Agent Generation via Evolutionary Algorithms.
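A toy sketch of a generic evolutionary loop (selection, crossover, mutation) that grows a single expert agent into a team. It does not reproduce EvoAgent’s operators: the skill pool, the task requirements, and the `fitness` function are made up for illustration. Keeping the fittest half each generation (elitism) means the best score never decreases.

```python
import random

# Hypothetical pool of expert agents (identified by skill) and a toy task.
SKILLS = ["search", "math", "code", "writing", "planning", "critique"]
TASK_NEEDS = {"search", "code", "critique"}

def fitness(team: frozenset) -> float:
    # Reward covering the task's needs; penalise each extra agent slightly.
    return len(team & TASK_NEEDS) - 0.1 * len(team)

def mutate(team: frozenset, rng: random.Random) -> frozenset:
    # Toggle one expert agent in or out of the team.
    skill = rng.choice(SKILLS)
    return team - {skill} if skill in team else team | {skill}

def crossover(a: frozenset, b: frozenset, rng: random.Random) -> frozenset:
    # Child inherits each parent agent with probability 0.5 (sorted for reproducibility).
    return frozenset(s for s in sorted(a | b) if rng.random() < 0.5)

def evolve(generations: int = 20, pop_size: int = 8, seed: int = 0) -> tuple[frozenset, list[float]]:
    rng = random.Random(seed)
    # Start from single-agent "teams": one expert each.
    population = [frozenset({rng.choice(SKILLS)}) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]  # selection: the fittest half survives
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    return max(population, key=fitness), history

best, history = evolve()
print(sorted(best), round(fitness(best), 2))
```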

Participate in the Agentplex AI Agents Challenge

Are you building an AI agent? Compete in the AI Agents Global Challenge, with a $1 million prize pool.

It’s easy and free to apply; there is no registration fee.

Build an AI agent and demonstrate that it can execute productive tasks in a real-world enterprise area (e.g. finance, sales, cybersecurity, creative media…)

You will need to submit the following:

A brief summary describing:

The problem your AI agent is solving

Your agent’s features and functionality

List of tools you used to build your agent

A brief demo video of your agent running tasks

A URL/link to your demo app

Please note: you can submit only one application per participant.

To participate, submit the details above by clicking here: Submissions.

Reminder: we plan to extend the submission deadline beyond the original date of 1 September, so you can keep refining your application until the final submission deadline, which will be announced in July.

Next month, we’ll announce important further details, including a better allocation of the $1M prize pool, more benefits for the participants, and some guidelines and ideas.

To receive updates and further announcements, keep reading this newsletter, join our Discord channel, follow Agentplex on X, or simply drop us an email.

Upcoming AI Agents meetup in London, July

Next month, we’re planning to organise a meetup in London. If you are interested in giving a talk or a demo at the meetup, please contact Carlos here.

If you are interested in a summer internship, please apply by email here.

Thank you for reading the Agentplex newsletter. Have a great day.